How fast is rules_js? Why is it better than rules_nodejs? How does it compare to non-Bazel npm tools?

If you're reading this, you may be considering using rules_js for a new project, migrating your existing project to Bazel with rules_js, or switching your existing project from rules_nodejs to rules_js. If so, you've come to the right place. rules_js was designed from the ground up with performance in mind. The foundation of a performant Bazel rule set for JavaScript is fast fetching & linking of npm dependencies, which is what we'll be measuring here.

In this benchmark, we will compare the performance of fetching, linking, and running tools with rules_js against the competition. We'll compare the following tools & rules:

- yarn

- npm

- pnpm

- rules_nodejs yarn_install

- rules_nodejs npm_install

- rules_js npm_translate_lock

We've chosen a package.json from elastic/kibana as a representative large Node.js monorepo. This package.json has approximately 3750 downloaded npm packages in its transitive closure.

We will consider only node_modules-style linking in this benchmark. Yarn's Plug'n'Play linking style isn't widely supported at this time. Plug'n'Play linking is very fast since it doesn't need to lay out a node_modules tree on disk. However, it isn't a useful comparison for most users, since it doesn't work well with many tools in the ecosystem.

Considerations

There are a few important things to keep in mind when benchmarking npm package management tools so we don't compare apples to oranges.

Fetching from registry vs. using local caches

When you run a package manager for the first time on a project, you'll likely need to fetch 3rd-party packages from somewhere external, usually from the yarn or npm registries. On the first fetch, these packages are stored locally in your npm, yarn, pnpm and Bazel caches. The next time you run the same command, the cache is used and the command runs much faster.

We'll compare both fetching from the internet and using locally cached packages in this benchmark as both are common scenarios users will encounter.

Unlike yarn & npm, which cache npm package archives, pnpm's cache is a very performant per-file CAS (content-addressable store) under the hood. This gives pnpm a slight advantage over the other package managers in the time it takes to pull packages from its local cache.
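The following toy per-file content-addressable store, written in Python, makes the archive-versus-file distinction concrete. It is only a sketch of the general technique and does not reproduce pnpm's actual store layout or hash scheme. Each file is keyed by the SHA-512 of its contents, so a file that is byte-identical across packages or versions (a LICENSE, say) is stored once. `FileCAS` and `store_package` are hypothetical names.

```python
import hashlib


class FileCAS:
    """Toy in-memory, per-file content-addressable store.

    Illustrative only: this is not pnpm's actual store format.
    """

    def __init__(self) -> None:
        self.objects: dict[str, bytes] = {}

    def put(self, data: bytes) -> str:
        # The key is the hash of the file's contents, so a file that is
        # byte-identical across packages or versions is stored only once.
        digest = hashlib.sha512(data).hexdigest()
        self.objects.setdefault(digest, data)
        return digest

    def get(self, digest: str) -> bytes:
        return self.objects[digest]


def store_package(cas: FileCAS, files: dict[str, bytes]) -> dict[str, str]:
    """Store each file of an unpacked package; return a path -> digest index."""
    return {path: cas.put(data) for path, data in files.items()}


cas = FileCAS()
v1 = store_package(cas, {"LICENSE": b"MIT License", "index.js": b"module.exports = 1;\n"})
v2 = store_package(cas, {"LICENSE": b"MIT License", "index.js": b"module.exports = 2;\n"})
print(len(cas.objects))  # 3: the shared LICENSE file is stored only once
```

Packages in a store like this are already unpacked. Restoring one can plausibly be done by linking or copying files rather than re-extracting an archive. pnpm, for example, hard-links (or clones) files from its store into node_modules.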

Bazel's external repository cache (better thought of as a downloader cache) also caches the downloaded npm package archives. rules_js makes use of Bazel's external repository cache for caching downloaded npm package archives. Although pnpm can beat rules_js in full linking time when pulling from the cache, rules_js adds the ability to lazy fetch and lazy link, which makes it faster than pnpm for many common workflows.
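A downloader cache, by contrast, stores whole archives. The Python sketch below keys each cached tarball by the Subresource Integrity string (`sha512-<base64 digest>`) that npm-style lockfiles record for every package, so a warm-cache lookup needs nothing but the lockfile entry. `ArchiveCache` and the injected `download` callable are hypothetical stand-ins, not Bazel's implementation. The only idea borrowed from Bazel is that its repository cache is keyed by a checksum of the downloaded file.

```python
import base64
import hashlib
from typing import Callable


def sri_integrity(data: bytes) -> str:
    """Compute an SRI string ("sha512-" + base64 digest), as npm lockfiles record."""
    return "sha512-" + base64.b64encode(hashlib.sha512(data).digest()).decode("ascii")


class ArchiveCache:
    """Toy downloader cache: whole package archives keyed by expected integrity."""

    def __init__(self, download: Callable[[str], bytes]) -> None:
        self._download = download  # hypothetical network fetch function
        self._archives: dict[str, bytes] = {}
        self.network_fetches = 0

    def fetch(self, url: str, integrity: str) -> bytes:
        # A cache hit needs only the integrity string from the lockfile;
        # the URL is consulted only on a miss.
        if integrity in self._archives:
            return self._archives[integrity]
        data = self._download(url)
        self.network_fetches += 1
        if sri_integrity(data) != integrity:
            raise ValueError(f"integrity mismatch for {url}")
        self._archives[integrity] = data
        return data
```

A hit still returns a compressed archive that must be extracted before linking. That is one plausible reason a per-file store like pnpm's can restore from cache faster.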

Bazel rules use npm, yarn and pnpm lockfiles

The yarn_install, npm_install and npm_translate_lock Bazel rules use pre-generated yarn, npm and pnpm lockfiles respectively. Users will typically generate the lockfiles outside of Bazel using the package managers directly.

We will benchmark how long it takes to generate lockfiles with yarn, npm and pnpm, since Bazel users will need to run these tools to generate & update their lockfiles.

For all direct comparisons of package managers against Bazel rules, lockfiles will have been pre-generated.

Configuration

These benchmarks were run on a MacBook Pro (16-inch, 2019) with a 2.4 GHz 8-Core Intel Core i9 and 64 GB 2667 MHz DDR4, running macOS Monterey 12.3.1.

Internet throughput (relevant for fetching dependencies from the registry) was approximately 60 Mbps download & 25 Mbps upload.

The versions of the package managers used were:

- pnpm 7.1.7

- npm 8.11.0

- yarn 1.22.19

The versions of the package managers used by the Bazel npm_install and yarn_install rules, fetched hermetically by Bazel, were:

- npm 8.5.5

- yarn 1.22.11

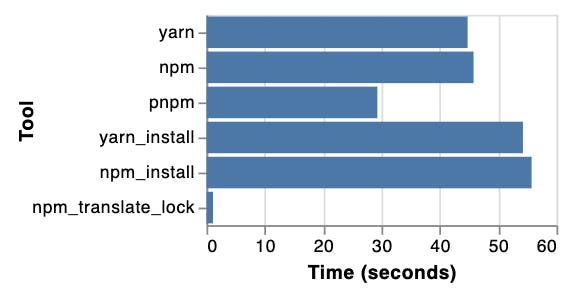

Lockfile generation / dependency resolution

Let's start by measuring how long lockfile generation (also known as dependency resolution) takes with yarn, npm and pnpm. Bazel doesn't come into this comparison, since the Bazel package management rules use pre-generated lockfiles.

We run each tool twice: once with an empty cache and once with its cache populated from the previous run. In both cases, no lockfile is pre-generated and no node_modules folder is present.

pnpm is the fastest of the three tools for generating lockfiles. This is partly because it has a --lockfile-only flag, which we set so that it generates a lockfile without linking a node_modules folder.

Since npm and yarn always link a node_modules tree, we can't tell how much of their time is spent on lockfile generation alone. That measurement wouldn't be useful anyway. Neither npm nor yarn has a --lockfile-only flag, so a user generating a lockfile with these tools is forced to link a node_modules tree as well.
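The cold/warm methodology above can be sketched as a small Python harness. We don't know the exact scripts behind the numbers in this post, so treat this as an illustration. `time_cold_and_warm` and `remove` are hypothetical helpers. The harness also assumes each tool has already been pointed at `cache_dir`; how to do that differs per tool and is not shown. The command lists use only the flags named in this section.

```python
import shutil
import subprocess
import time
from pathlib import Path

# Lockfile-generation commands compared above. Only pnpm can skip linking;
# npm and yarn link a node_modules tree as part of the same run.
LOCKFILE_COMMANDS = {
    "pnpm": ["pnpm", "install", "--lockfile-only"],
    "npm": ["npm", "install"],
    "yarn": ["yarn", "install"],
}


def remove(paths: list[Path]) -> None:
    """Delete each file or directory in paths, if present."""
    for path in paths:
        if path.is_dir():
            shutil.rmtree(path)
        elif path.exists():
            path.unlink()


def time_cold_and_warm(
    cmd: list[str], cwd: Path, cache_dir: Path, outputs: list[Path]
) -> tuple[float, float]:
    """Return (cold, warm) wall-clock seconds for cmd.

    Assumes the tool is configured to use cache_dir. The cache is emptied
    once before the cold run; outputs (lockfile, node_modules) are removed
    before every run, so only the cache state differs between the runs.
    """
    remove([cache_dir])
    timings = []
    for _ in range(2):
        remove(outputs)
        start = time.perf_counter()
        subprocess.run(cmd, cwd=cwd, check=True)
        timings.append(time.perf_counter() - start)
    return timings[0], timings[1]
```

For pnpm, you would pass `LOCKFILE_COMMANDS["pnpm"]` with `outputs=[Path("pnpm-lock.yaml"), Path("node_modules")]`. npm and yarn are timed the same way with their own lockfile names.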

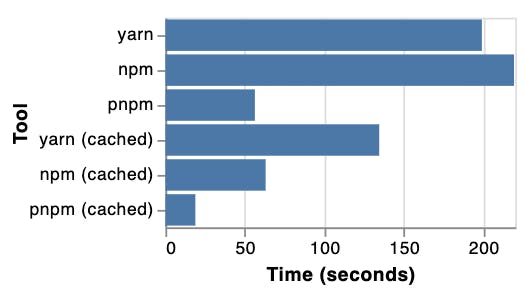

Linking node_modules with lockfile pre-generated

With a pre-generated lockfile, we can bring Bazel rules into the comparisons.

The yarn_install and npm_install rules from rules_nodejs have been around for many years. These rules run the yarn and npm package managers under the hood and rely on them for fetching and linking. Caching is handled by yarn and npm as well for these rules. As such, yarn_install and npm_install do not offer performance improvements over running these package managers outside of Bazel.

The npm_translate_lock rule from the new rules_js rules, on the other hand, does not use any package managers under the hood. It consumes a pre-generated pnpm lockfile but internally it uses pure Bazel to fetch npm dependencies and to link the node_modules tree. Since fetching is handled by Bazel, npm packages fetched from the registry are cached in Bazel's external repository cache.
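Because npm_translate_lock fetches with Bazel rather than a package manager, each locked package can become an independent download. The Python sketch below shows the general shape of that translation. It assumes the lockfile has already been parsed into (name, version, integrity) entries; parsing pnpm-lock.yaml is not shown. The tarball URL follows the default npm registry convention. `LockedPackage`, `FetchSpec` and the repository naming scheme are made up for illustration and are not rules_js internals.

```python
from dataclasses import dataclass

REGISTRY = "https://registry.npmjs.org"


@dataclass(frozen=True)
class LockedPackage:
    name: str       # e.g. "chai" or "@babel/core"
    version: str    # exact version pinned by the lockfile
    integrity: str  # SRI string recorded in the lockfile


@dataclass(frozen=True)
class FetchSpec:
    repo_name: str  # hypothetical per-package repository name
    url: str
    integrity: str


def tarball_url(name: str, version: str) -> str:
    """Default npm registry tarball URL; scoped names keep the scope in the path."""
    basename = name.rsplit("/", 1)[-1]
    return f"{REGISTRY}/{name}/-/{basename}-{version}.tgz"


def fetch_specs(packages: list[LockedPackage]) -> list[FetchSpec]:
    """One independent, individually cacheable download per locked package."""
    specs = []
    for pkg in packages:
        safe_name = pkg.name.replace("@", "").replace("/", "__")
        repo = f"npm__{safe_name}__{pkg.version}"
        specs.append(FetchSpec(repo, tarball_url(pkg.name, pkg.version), pkg.integrity))
    return specs
```

Since every spec carries its own integrity, each download can hit or miss the repository cache on its own, independently of the rest of the tree.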

As seen above, pnpm lockfiles can be generated faster than yarn and npm lockfiles. The npm_translate_lock rule therefore also has the least lockfile generation overhead of the Bazel rules measured, and lockfile generation is a prerequisite to fetching, linking and building.

Linking with rules_js using npm_translate_lock is beaten only by linking with pnpm itself. As we'll see shortly, however, that is not the whole story, as rules_js still has a few other tricks up its sleeve.

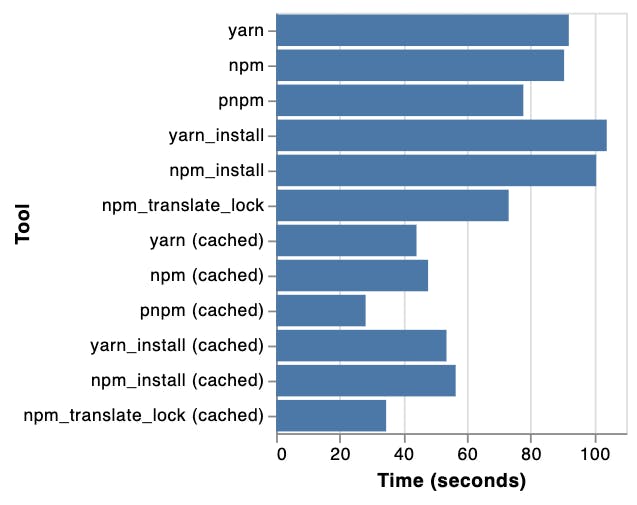

Incremental node_modules linking

One of the major deficiencies in the npm_install and yarn_install rules from rules_nodejs is that they don't support incremental node_modules linking. Any change to either the package.json or the lockfile invalidates the external repository containing node_modules. The entire node_modules tree must then be re-linked, with the same long install times seen above.

This is a major performance penalty for both local development and CI. Locally, you can hit this often when switching branches and rebasing in repositories with a large number of 3rd-party npm deps. Some developers will go so far as to change their local workflows to avoid this penalty as much as possible. On CI, persistent workers for change sets and landed commits can also hit this often, leading to slow CI times even on trivial changes.

With the npm_translate_lock rule from rules_js, we set out to resolve this deficiency from the start. We chose the pnpm lockfile format because it allows for both fetching npm packages individually from the registry with Bazel's downloader and incrementally linking the node_modules tree with fine-grained outputs.
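A few lines of Python can sketch the difference between whole-tree and fine-grained invalidation, using the chai@3.5.0 to chai@4.3.6 upgrade from the benchmark in this section. Resolved packages are modeled as "name@version" strings. The other package versions are invented, and chai's own transitive dependencies are left out for brevity. `monolithic_key` stands for a single cache key over the whole resolution, as with one external repository per lockfile. `relink_plan` instead diffs per-package entries. Both functions are hypothetical.

```python
import hashlib


def monolithic_key(resolved: set[str]) -> str:
    """One key for the whole tree: any change invalidates everything."""
    return hashlib.sha256("\n".join(sorted(resolved)).encode()).hexdigest()


def relink_plan(old: set[str], new: set[str]) -> dict[str, set[str]]:
    """Split a lockfile change into packages to fetch/link, delete, or reuse."""
    return {
        "fetch_and_link": new - old,
        "delete": old - new,
        "reuse": new & old,
    }


before = {"chai@3.5.0", "lodash@4.17.21", "uuid@8.3.2"}
after = {"chai@4.3.6", "lodash@4.17.21", "uuid@8.3.2"}
print(monolithic_key(before) == monolithic_key(after))  # False: relink everything
print(sorted(relink_plan(before, after)["fetch_and_link"]))  # ['chai@4.3.6']
```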

To illustrate this, we start with a full install to fetch, populate the cache & link node_modules. We then make an arbitrary change to a package.json dependency (in this case, upgrading chai@3.5.0 to chai@4.3.6) and re-run install. For the Bazel rules, we first re-generate the lockfile, which is not included in the measurement. This scenario is similar to rebasing onto HEAD and getting an updated package.json & lockfile from the remote.

Unlike yarn_install and npm_install, which must re-link the entire node_modules tree when there are any changes to dependencies, npm_translate_lock from rules_js matches the incremental linking performance web developers are used to outside of Bazel.

We feel this improvement will get more web developers on board with building with Bazel as their local workflow build times won't regress as they can with rules_nodejs. This is especially true at companies with large monorepos and/or a large set of npm dependencies.

rules_js, like pnpm, links a symlinked node_modules structure, which allows for incremental and lazy fetching and linking with Bazel. Not all tools currently work with this linking style. However, many major ones do, and many open source projects such as Next.js, Vue and Vite have already migrated to pnpm and are compatible with this linking style. There is also a growing number of tools in the ecosystem with pnpm support: pnpm.io/community/tools.
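The symlinked layout that rules_js shares with pnpm can be sketched as a mapping from link paths to relative targets. In the Python sketch below, every package lives once under `node_modules/.pnpm/<name>@<version>/node_modules/<name>`. Only direct dependencies are linked at the top of node_modules, and each package's own dependencies are linked next to it. The sketch deliberately ignores scoped packages, peer-dependency variants and how the package files themselves get into place. `symlink_plan` and `split_key` are hypothetical helpers.

```python
def split_key(key: str) -> tuple[str, str]:
    """'foo@1.0.0' -> ('foo', '1.0.0'); unscoped names only in this sketch."""
    name, version = key.rsplit("@", 1)
    return name, version


def symlink_plan(direct: list[str], graph: dict[str, list[str]]) -> dict[str, str]:
    """Map each symlink path to its relative target in a pnpm-style tree.

    graph maps "name@version" to the "name@version" keys it depends on.
    """
    links = {}
    # Top level: only the project's direct dependencies are visible.
    for key in direct:
        name, _ = split_key(key)
        links[f"node_modules/{name}"] = f".pnpm/{key}/node_modules/{name}"
    # Each package sees exactly its declared dependencies as siblings.
    for key, deps in graph.items():
        for dep in deps:
            dep_name, _ = split_key(dep)
            links[f"node_modules/.pnpm/{key}/node_modules/{dep_name}"] = (
                f"../../{dep}/node_modules/{dep_name}"
            )
    return links


plan = symlink_plan(["foo@1.0.0"], {"foo@1.0.0": ["bar@1.0.0"], "bar@1.0.0": []})
for link, target in sorted(plan.items()):
    print(f"{link} -> {target}")
# node_modules/.pnpm/foo@1.0.0/node_modules/bar -> ../../bar@1.0.0/node_modules/bar
# node_modules/foo -> .pnpm/foo@1.0.0/node_modules/foo
```

bar is not reachable from the top-level node_modules even though foo depends on it. Code that relies on undeclared, hoisted dependencies fails under this layout, which is one common reason a tool doesn't work with it. On the other hand, each package directory is determined by its name@version and its declared dependencies alone. That independence is what lets such a tree be fetched and linked package by package.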

We plan to add support for yarn & npm to rules_js post 1.0. The yarn & npm rules won't support incremental linking or lazy fetching and linking since, like in rules_nodejs, they will just run the yarn and npm package managers under the hood. These rules are meant to be used for migrations from rules_nodejs to rules_js, so you can migrate to rules_js without switching your package manager. Once on rules_js, the switch to pnpm to unlock incremental and lazy fetching and linking can be tackled separately.

Some projects are unable to switch to pnpm for lockfile generation and/or are not compatible with the symlinked node_modules structure. For these, the yarn & npm rules can also be used to migrate to Bazel with rules_js. However, the recommended approach is to first migrate to pnpm outside of Bazel and then migrate to Bazel with rules_js, so your DX for npm dependencies does not regress.

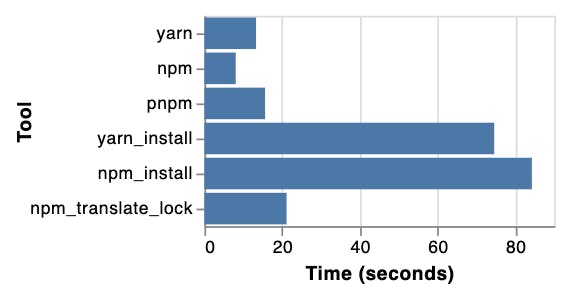

Lazy fetching and linking

Of all the package management solutions in this benchmark, rules_js alone allows for lazy fetching and lazy linking of npm dependencies. Bazel's action graph makes this possible. npm, yarn and pnpm are not able to reproduce it, and rules_nodejs was not designed to do it.

Unlike npm, yarn, pnpm, npm_install and yarn_install, which must link the entire node_modules tree, rules_js using npm_translate_lock is able to fetch and link only the subset of the node_modules tree that is required for building and/or running one or more targets.
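Lazy fetching comes down to computing the transitive closure of what a target actually needs. The Python sketch below computes that closure over a lockfile dependency graph with a breadth-first search. In rules_js, Bazel's action graph decides what gets fetched, not a function like this one. The package names in the example graph are hypothetical.

```python
from collections import deque


def transitive_closure(roots: list[str], graph: dict[str, list[str]]) -> set[str]:
    """Return every package reachable from roots in a lockfile dependency graph.

    graph maps "name@version" to the "name@version" keys it depends on.
    """
    needed: set[str] = set()
    queue = deque(roots)
    while queue:
        pkg = queue.popleft()
        if pkg in needed:
            continue  # already visited (also guards against cycles)
        needed.add(pkg)
        queue.extend(graph.get(pkg, []))
    return needed


# Hypothetical lockfile graph: a small CLI next to a large, unrelated framework.
graph = {
    "small-cli@1.0.0": ["helper@2.0.0"],
    "helper@2.0.0": [],
    "big-framework@9.0.0": ["plugin-a@1.0.0", "plugin-b@1.0.0"],
    "plugin-a@1.0.0": ["helper@2.0.0"],
    "plugin-b@1.0.0": [],
}
print(sorted(transitive_closure(["small-cli@1.0.0"], graph)))
# ['helper@2.0.0', 'small-cli@1.0.0']
```

Running the CLI needs only two of the five packages. A full install would fetch and link all of them.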

To illustrate this, we start with populated caches and no node_modules folder linked. For each tool, we'll do the bare minimum to run the CLI of the "uuid" npm package, which is pulled in as a direct dependency.

For npm, yarn and pnpm, we'll have to fully link and then run ./node_modules/.bin/uuid.

For npm_install and yarn_install, we will run the nodejs_binary target with bazel run //:uuid_bin.

For npm_translate_lock, we will run the js_binary target with bazel run //:uuid_bin.

Only js_binary from rules_js is able to fetch and link just the uuid npm package and its transitive dependencies to run the tool. The same principle of lazy fetching and linking holds for all rules_js-based build and test targets: you only need to fetch and link the npm dependencies that are required for the targets you are building and testing.

In a large monorepo with many projects sharing a package.json or a workspace of many package.json files, this can lead to significant time savings by not having to fetch & link npm dependencies whenever there are changes to npm dependencies that don't affect you. Bazel will determine which changes affect you so you don't have to.
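Deciding whether a lockfile change affects a target can be phrased as comparing the target's npm dependency closure before and after the change. Bazel actually decides this by comparing digests of each action's inputs, so the Python sketch below only captures the idea. `is_affected` and `closure` are hypothetical helpers. The example's transitive dependencies are left out for brevity.

```python
def closure(roots: set[str], graph: dict[str, list[str]]) -> frozenset[str]:
    """Packages reachable from roots (iterative depth-first search)."""
    seen: set[str] = set()
    stack = list(roots)
    while stack:
        pkg = stack.pop()
        if pkg not in seen:
            seen.add(pkg)
            stack.extend(graph.get(pkg, []))
    return frozenset(seen)


def is_affected(
    roots_before: set[str], graph_before: dict[str, list[str]],
    roots_after: set[str], graph_after: dict[str, list[str]],
) -> bool:
    """True if a target's npm inputs differ across a lockfile change."""
    return closure(roots_before, graph_before) != closure(roots_after, graph_after)


# A lockfile change that upgrades chai; transitive deps omitted for brevity.
before = {"uuid@8.3.2": [], "chai@3.5.0": []}
after = {"uuid@8.3.2": [], "chai@4.3.6": []}
print(is_affected({"uuid@8.3.2"}, before, {"uuid@8.3.2"}, after))  # False
print(is_affected({"chai@3.5.0"}, before, {"chai@4.3.6"}, after))  # True
```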