Photo by Hush Naidoo Jade Photography on Unsplash

Diagnosing Bazel Cache Misses

Part 1. Repository Rules

Are you building with Bazel but having caching woes for a particular test target? Perhaps it's not getting as many cache hits as you'd expect, or even worse, none at all!

Fear not! In this blog series, we'll look at a number of ways to investigate and get to the bottom of these cache misses. We'll also walk through some common patterns for fixing troublesome files.

In this post, we'll focus on repository rule determinism and one way to query for file differences between executions.

Deterministic Repository Rules

Firstly, let's define what we mean by a deterministic repository rule.

A repository rule is deterministic if, given the same setup and install of a dependency, it produces the same output every time it runs.
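To check this definition directly, we can reduce a repository rule's output directory to a single hash and compare it across two fetches. The sketch below is a minimal illustration, not a Bazel feature: `dir_fingerprint` is a hypothetical helper, and it assumes `sha256sum` (GNU coreutils) or `shasum` (macOS) is on PATH.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Pick a SHA-256 tool: sha256sum (GNU coreutils) if present, else shasum (macOS).
if command -v sha256sum >/dev/null 2>&1; then
  SHA256=(sha256sum)
else
  SHA256=(shasum -a 256)
fi

# Print one hash that summarises every regular file under directory $1.
# Paths are hashed relative to $1 and sorted, so the result depends only on
# file names and contents, not on where the directory lives or on find's order.
dir_fingerprint() {
  (
    cd "$1"
    find . -type f -print0 |
      LC_ALL=C sort -z |
      xargs -0 "${SHA256[@]}" |   # one "<hash>  ./path" line per file
      "${SHA256[@]}" |            # hash the whole sorted listing
      cut -d ' ' -f 1
  )
}
```

To use it, copy an external repository out of `$(bazel info output_base)/external/<repo>` (where `<repo>` is a placeholder for, say, your pip repository's name), run `bazel clean --expunge`, rebuild, copy it again and fingerprint both copies. Matching fingerprints suggest the rule behaved deterministically for that fetch; differing ones are worth a closer look. Note that `find -type f` skips symlinks, which many repository rules create, so this only covers regular files.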

Rules such as http_archive and http_file are deterministic, provided a sha256 checksum is set: they download a file, verify it against the checksum and expose targets to access the downloaded file.

However, for repository rules that call out to package managers such as pip, npm or cargo (to name a few), we cannot guarantee that the output of the install process is consistent.

While these package managers have lock files that pin our direct and transitive dependencies (i.e. we get the same set of dependencies each time), they don't tell us whether a package will run some sort of "post install" script or process. For example, it's quite common for Python distributions to build native extensions from source during install, and we may end up with differing .so files each time. Because this happens while the repository rule runs, the outputs of this process become inputs to the rest of the build, causing the cache misses and unnecessary rebuilds we are seeing.
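We can reproduce this mechanism in miniature without pip. The toy script below is purely illustrative: `fake_post_install` is a hypothetical stand-in for a package's build step. It writes its output from a fresh temporary directory and, in one mode, embeds that directory's absolute path in the artifact, much like the psycopg2 build in the next example.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hash a single file with whichever SHA-256 tool is available.
sha256_of() {
  if command -v sha256sum >/dev/null 2>&1; then
    sha256sum "$1" | cut -d ' ' -f 1
  else
    shasum -a 256 "$1" | cut -d ' ' -f 1
  fi
}

# Hypothetical "post install" step. It works in a fresh temporary directory;
# in "absolute" mode it bakes that path into the artifact, otherwise it
# writes a path-independent artifact.
fake_post_install() {
  local out="$1" mode="$2" build_dir
  build_dir="$(mktemp -d)"
  if [ "$mode" = "absolute" ]; then
    printf 'compiled in %s\n' "$build_dir" > "$out"
  else
    printf 'compiled in .\n' > "$out"
  fi
  rmdir "$build_dir"
}

# Run each mode twice, as two separate installs would, and compare the hashes.
work="$(mktemp -d)"
for mode in absolute relative; do
  fake_post_install "$work/$mode-1.so" "$mode"
  fake_post_install "$work/$mode-2.so" "$mode"
  if [ "$(sha256_of "$work/$mode-1.so")" = "$(sha256_of "$work/$mode-2.so")" ]; then
    echo "$mode: identical"
  else
    echo "$mode: differ"
  fi
done
```

Running it should print `absolute: differ` followed by `relative: identical`: the same "install" run twice yields a different artifact only when the build location leaks into it.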

Example: Python wheels compiled from source distributions

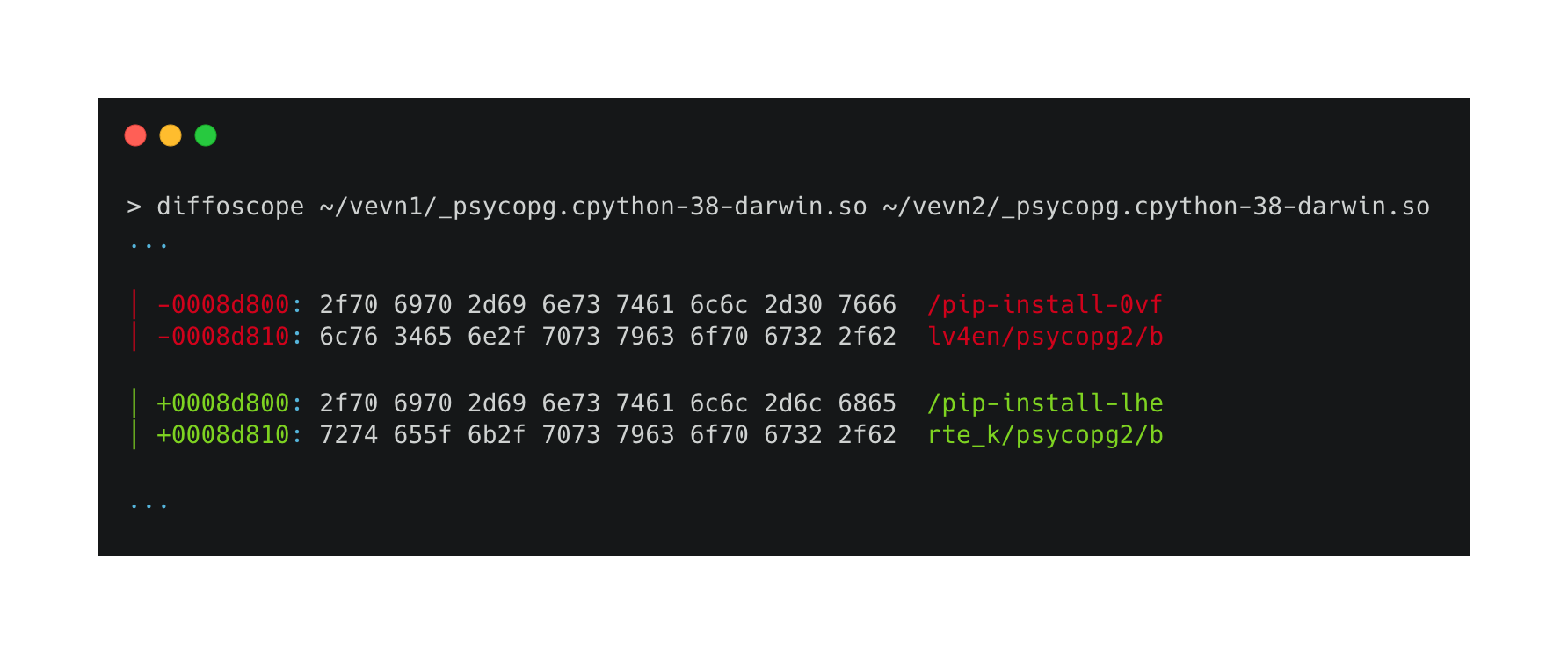

The example above compares the output of two separate pip install runs in which psycopg2 was built from source.

Diffing the .so files with diffoscope makes it clear that, while building the wheel and other artifacts, the absolute path of the temporary directory pip uses was embedded in the resulting artifacts. Because these files are inputs to our build graph, every fresh install changes them, resulting in cache misses both locally and on CI.
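diffoscope gives the full picture, but for a quick first pass a grep over the binary for strings that look like temporary directories can confirm the same kind of leak. This is a minimal heuristic sketch, not part of diffoscope or pip: `find_embedded_tmp_paths` is a hypothetical helper, and the path pattern assumes Linux-style `/tmp` or macOS `/var/folders` (optionally under `/private`).

```shell
#!/usr/bin/env bash
set -euo pipefail

# Heuristic: print absolute temporary paths embedded in a (possibly binary)
# file such as a compiled .so. Adjust the pattern for your platform.
find_embedded_tmp_paths() {
  # -a: treat binary as text; -o: print only the matching path strings.
  # "|| true" keeps a file with no matches from failing under set -e.
  { LC_ALL=C grep -a -o -E '(/private)?(/tmp|/var/folders)/[A-Za-z0-9._+/-]+' "$1" || true; } |
    LC_ALL=C sort -u
}
```

Running `find_embedded_tmp_paths` on the extension module from each install (the exact .so path depends on your setup) lists any leaked build directories; different paths between the two installs point at the kind of nondeterminism diffoscope reports. It is only a heuristic: it misses paths outside the assumed prefixes and says nothing about other sources of difference, such as timestamps.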

As previously mentioned, there are a few methods to help find these differences. One is to query for the dependencies of the target in question, get the paths to their source files, hash those files, and diff the resulting shasums across two runs of the query, separated by a bazel clean --expunge. We'll use the command below to generate the shasum file. Make sure to replace //foo with the target you are investigating.

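```shell
# Hash every source file in the transitive dependencies of //foo
bazel query "kind('source file', deps(//foo))" --output xml |
  xq '.query."source-file"[]."@location"' --raw-output |  # extract each source file's location
  awk -F ':' '{print $1}' |                                # strip the :line:column suffix
  sort |
  xargs shasum -a 256 |
  tee shas.txt
```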

Some parts of this are optional, e.g. the use of tee, but it's handy for seeing what is being written to shas.txt.

The xq tool is included with yq; see kislyuk.github.io/yq/#xml-support for more info.
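The two runs can also be automated. This is a minimal sketch, assuming bazel, xq and either `sha256sum` or `shasum` are on PATH; the function names `hash_inputs` and `compare_two_runs` and the output files `shas-run1.txt` and `shas-run2.txt` are our own choices, not part of Bazel.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Pick a SHA-256 tool: sha256sum (GNU coreutils) if present, else shasum (macOS).
if command -v sha256sum >/dev/null 2>&1; then SHA256=(sha256sum); else SHA256=(shasum -a 256); fi

# Hash every source file in the transitive dependencies of target $1.
hash_inputs() {
  bazel query "kind('source file', deps($1))" --output xml |
    xq '.query."source-file"[]."@location"' --raw-output |
    awk -F ':' '{print $1}' |
    sort |
    xargs "${SHA256[@]}"
}

# Hash, expunge, hash again, then diff the two listings.
compare_two_runs() {
  local target="$1"
  hash_inputs "$target" > shas-run1.txt
  bazel clean --expunge
  hash_inputs "$target" > shas-run2.txt
  # diff exits 1 when the files differ; report that instead of aborting.
  if diff -u shas-run1.txt shas-run2.txt; then
    echo "No input differences for $target"
  fi
}
```

Source the script and run, for example, `compare_two_runs //foo` from your workspace. Because `bazel clean --expunge` removes the whole output base, including fetched external repositories, the second query re-runs every repository rule, which is exactly what we want to test.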

Once we have the two lists of shasums, diffing them shows exactly which input files changed between runs, and we can work on applying the necessary fixes. In some cases the solution may simply be to exclude those files from the inputs to the build graph (for example, leftover temporary files from invoking another build tool in a post install step); other cases may require more complex fixes.

We will dive into those cases further in a later blog post in this series.